InfiniBand Networking Solutions

Complex workloads require ultra-fast processing of high-resolution simulations, extremely large datasets, and highly parallelized algorithms. As these computing needs continue to grow, NVIDIA Quantum InfiniBand, the world's only fully offloadable In-Network Computing platform, delivers the leap in performance required for high-performance computing (HPC), AI, and hyperscale cloud infrastructures, at lower cost and with less complexity.

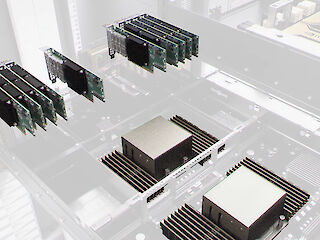

The NVIDIA InfiniBand Portfolio

InfiniBand Adapters

InfiniBand host channel adapters (HCAs) offer ultra-low latency, high throughput, and innovative NVIDIA In-Network Computing engines, providing the acceleration, scalability, and rich feature set that modern workloads require.

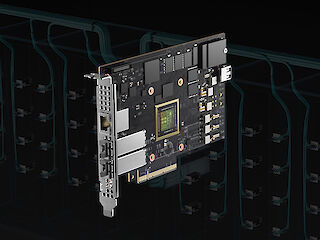

Data Processing Units (DPUs)

The NVIDIA BlueField® DPU combines powerful computing, fast networking, and extensive programmability to offer software-defined, hardware-accelerated solutions for the most demanding workloads. From accelerated AI and scientific computing to cloud-native supercomputing, BlueField redefines what is possible.

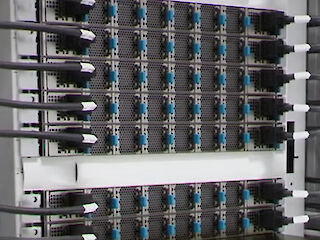

InfiniBand Switches

InfiniBand switch systems provide the highest performance and port density available. Innovative capabilities such as NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ and NVIDIA In-Network Computing acceleration engines, together with advanced management features such as self-healing network capabilities, quality of service, and enhanced virtual lane mapping, boost performance for industrial, AI, and scientific applications.

Routers and Gateway Systems

InfiniBand routers provide the highest scalability and subnet isolation, while InfiniBand-to-Ethernet gateway systems offer a scalable and efficient way to connect InfiniBand data centers to Ethernet infrastructures.

Long-Haul Systems

NVIDIA MetroX® long-haul systems can seamlessly connect remote InfiniBand data centers, storage, and other InfiniBand platforms. They extend InfiniBand's reach to distances of up to 40 kilometers, enabling native InfiniBand connectivity between remote data centers, or between data centers and remote storage infrastructures, for high availability and disaster recovery.

Cables and Transceivers

LinkX® cables and transceivers are designed to maximize the performance of HPC networks, which require high-bandwidth, low-latency, and highly reliable connections between InfiniBand elements.

Software for the Best Performance

MLNX_OFED

MLNX_OFED is NVIDIA's tested and packaged version of the OpenFabrics Alliance's OFED (OpenFabrics Enterprise Distribution), optimized for high-performance input/output.

HPC-X

NVIDIA HPC-X is a comprehensive MPI and SHMEM/PGAS software suite that takes advantage of InfiniBand In-Network Computing and acceleration engines.

UFM

The NVIDIA Unified Fabric Manager (UFM) allows data center administrators to manage, monitor, and troubleshoot InfiniBand networks.

Magnum IO

NVIDIA Magnum IO combines network IO, In-Network Computing, storage, and IO management to simplify and speed up data movement, access, and management for multi-GPU, multi-node systems.

InfiniBand-Enhanced Capabilities

In-Network Computing

NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) offloads collective communication operations to the switch network, reducing data transmission and increasing the efficiency of Message Passing Interface (MPI) operations.
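To illustrate the idea of reducing data inside the network, here is a toy Python model of hierarchical aggregation for an allreduce-style collective (the pattern behind operations such as MPI_Allreduce). It is a minimal sketch under stated assumptions, not the SHARP protocol or any NVIDIA API: the `Host`, `Switch`, `reduce_up`, `broadcast_down`, `allreduce`, and `max_fan_in` names are hypothetical. Each switch sums the vectors arriving from its children and forwards a single vector upstream, and the final sum is then sent back down to every host.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Host:
    """Hypothetical end node that contributes a local vector."""
    data: List[float]


@dataclass
class Switch:
    """Hypothetical switch that aggregates vectors from its children."""
    children: List[Union["Switch", Host]] = field(default_factory=list)


def reduce_up(node: Union[Switch, Host]) -> List[float]:
    """Sum the host vectors in this subtree.

    A switch combines everything it receives and forwards a single
    vector upstream, instead of relaying every host's vector.
    """
    if isinstance(node, Host):
        return list(node.data)
    partials = [reduce_up(child) for child in node.children]
    return [sum(values) for values in zip(*partials)]  # element-wise sum


def broadcast_down(node: Union[Switch, Host], result: List[float]) -> None:
    """Deliver the final reduced vector back to every host in the subtree."""
    if isinstance(node, Host):
        node.data = list(result)
        return
    for child in node.children:
        broadcast_down(child, result)


def allreduce(root: Switch) -> List[float]:
    """Reduce up the tree, then broadcast the result down (allreduce pattern)."""
    result = reduce_up(root)
    broadcast_down(root, result)
    return result


def max_fan_in(node: Union[Switch, Host]) -> int:
    """Most vectors any single node receives during the reduce phase."""
    if isinstance(node, Host):
        return 0
    return max([len(node.children)] + [max_fan_in(c) for c in node.children])


# Two leaf switches with four hosts each, joined by one root switch.
hosts = [Host([float(i), 1.0]) for i in range(8)]
root = Switch([Switch(hosts[:4]), Switch(hosts[4:])])

print(allreduce(root))   # [28.0, 8.0]
print(max_fan_in(root))  # 4
```

In this model no node receives more than four vectors, whereas a naive scheme in which one host gathers all eight inputs would make that host receive seven and then send the result back out on its own link.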

Self-Healing Network

InfiniBand's self-healing network capabilities recover from link failures 5,000 times faster than software-based solutions.

Quality of Service

InfiniBand is the only high-performance interconnect solution with advanced quality-of-service capabilities, such as congestion control and adaptive routing, for efficient networking.

Network Topologies

InfiniBand offers centralized management and supports any topology, including Fat Tree, Hypercube, Torus, and Dragonfly+. Its routing algorithms optimize performance for the communication patterns of specific applications.
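As a rough illustration of how a Fat Tree scales, the standard sizing for a non-blocking fat tree built from identical switches with k ports (k even) gives k²/2 hosts with two switch levels and k³/4 hosts with three. The Python sketch below computes these figures. The function name `fat_tree_capacity` and the example radix of 40 are assumptions for illustration, not NVIDIA sizing guidance; real deployments may use oversubscription, mixed switch models, or other topologies entirely.

```python
from typing import Tuple


def fat_tree_capacity(radix: int, levels: int) -> Tuple[int, int]:
    """Return (max_hosts, switch_count) for a non-blocking fat tree.

    Assumes identical switches with `radix` ports, where every switch
    below the top level uses half its ports facing down and half facing up.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    if levels == 2:
        # k leaf switches with k/2 hosts each, plus k/2 spine switches
        return radix * radix // 2, radix + radix // 2
    if levels == 3:
        # k pods of (k/2 edge + k/2 aggregation) switches, plus (k/2)^2 core switches
        return radix ** 3 // 4, radix * radix + (radix // 2) ** 2
    raise ValueError("only 2- and 3-level trees are modeled here")


for levels in (2, 3):
    hosts, switches = fat_tree_capacity(40, levels)
    print(f"{levels}-level, radix 40: {hosts} hosts, {switches} switches")
# 2-level, radix 40: 800 hosts, 60 switches
# 3-level, radix 40: 16000 hosts, 2000 switches
```

Adding a third level multiplies host capacity by k/2, which is why large clusters move from two-level to three-level trees as they grow.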

Contact AMBER now!

AMBER brings high-performance, end-to-end networking to scientific computing, AI, and cloud data centers.