Performance vs. Energy Consumption: How Hardware Dominates AI's Energy Balance

Thursday, August 15, 2024 General Info

ChatGPT by OpenAI has taken the working world by storm in recent months. Thanks to Artificial Intelligence (AI) and Natural Language Processing (NLP), this technology has become widely accessible almost overnight. However, with increased usage, criticism has also grown louder, pointing to hallucinations, inaccurate reproduction of facts, copyright issues, and energy consumption.

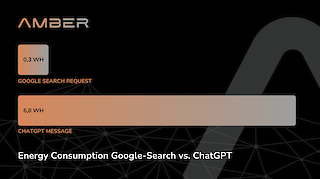

Energy consumption is particularly interesting because it often goes unnoticed. While a Google search consumes about 0.0003 kilowatt-hours, a chat message to ChatGPT requires approximately 0.0068 kilowatt-hours, roughly 23 times as much. These figures show that even the less visible aspects of AI usage belong in any overall evaluation.
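The ratio between the two quoted figures can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the per-request values are the ones given in the text, while the workload of one million requests is a hypothetical number chosen for illustration.

```python
# Per-request energy figures as quoted in the text, in kilowatt-hours.
GOOGLE_SEARCH_KWH = 0.0003
CHATGPT_MESSAGE_KWH = 0.0068


def energy_ratio(numerator_kwh: float, denominator_kwh: float) -> float:
    """Return how many times more energy the first request type uses."""
    return numerator_kwh / denominator_kwh


def total_energy_kwh(per_request_kwh: float, requests: int) -> float:
    """Return the total energy for a given number of requests."""
    return per_request_kwh * requests


ratio = energy_ratio(CHATGPT_MESSAGE_KWH, GOOGLE_SEARCH_KWH)
print(f"A chat message uses about {ratio:.1f}x the energy of a search")

# Hypothetical workload: one million requests (not a figure from the article).
REQUESTS = 1_000_000
print(f"Search: {total_energy_kwh(GOOGLE_SEARCH_KWH, REQUESTS):,.0f} kWh")
print(f"Chat:   {total_energy_kwh(CHATGPT_MESSAGE_KWH, REQUESTS):,.0f} kWh")
```

Scaling to a larger request count makes the gap easier to grasp than the per-request values alone.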

2012: Over 800x More Energy for a Third Less Compute Performance with Titan

Energy consumption can be optimized in AI use cases well beyond internet research. One reason is outdated hardware. When we update AI infrastructure for clients, we regularly find that the existing systems are far less efficient than current hardware. This may be obvious, but the size of the gap in energy consumption is striking.

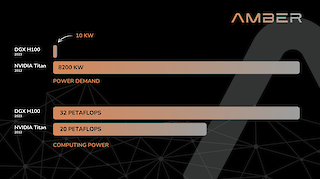

In recent years, hardware has improved so much that the difference quickly becomes apparent. Consider Titan, the supercomputer at Oak Ridge National Laboratory that went into operation in 2012 and was built on NVIDIA GPUs: it achieved 20 petaflops of compute performance while consuming 8,200 kW and costing about 130 million USD. NVIDIA's current DGX hardware, based on the H100 chip, consumes only about 10 kW (0.12% of Titan) for 50% more compute performance and costs about 400,000 USD (0.3% of Titan). The sustainability impact of switching to modern hardware is easy to see.
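The percentages above follow directly from the quoted figures. The sketch below recomputes them and adds performance per watt as a derived metric. The `System` class and its field names are hypothetical helpers, and the DGX figure of 30 petaflops is derived from "50% more" than Titan's 20. The two petaflops values may refer to different numeric precisions, so the result is best read as an order-of-magnitude comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    """Hypothetical container for the figures quoted in the article."""
    name: str
    petaflops: float
    power_kw: float
    cost_usd: float

    @property
    def gflops_per_watt(self) -> float:
        # 1 petaflop = 1e6 gigaflops; 1 kW = 1e3 W
        return (self.petaflops * 1e6) / (self.power_kw * 1e3)


titan = System("Titan (2012)", petaflops=20.0, power_kw=8200.0, cost_usd=130e6)
# "50% more" performance than Titan's 20 petaflops gives 30 petaflops.
dgx = System("DGX (H100)", petaflops=30.0, power_kw=10.0, cost_usd=400e3)

power_share = dgx.power_kw / titan.power_kw * 100   # DGX power as % of Titan
cost_share = dgx.cost_usd / titan.cost_usd * 100    # DGX cost as % of Titan
efficiency_gain = dgx.gflops_per_watt / titan.gflops_per_watt

print(f"Power: {power_share:.2f}% of Titan")
print(f"Cost:  {cost_share:.2f}% of Titan")
print(f"Performance per watt: {efficiency_gain:,.0f}x higher")
```

Performance per watt combines the power and performance figures into a single number, which is why it is a common way to compare hardware generations.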

How Can AI's Potential Be Fully Utilized Without Sacrificing Sustainability?

One solution is AI Factories. Wait – what are AI Factories?

AI Factories might be a new term to some. Generally, AI Factories refer to specialized server infrastructure designed solely to meet the enormous demands of AI models, making them particularly energy-efficient.

NVIDIA offers exactly this with its DGX platform. The analogy of a racing car is relevant here: the strongest engine (the GPU) can only deliver full performance if the right transmission (the internal network) is used, along with properly sized tires (the AI storage) to handle the full power. NVIDIA's work on advancing InfiniBand networking into a high-performance standard for AI clusters has largely gone unnoticed. Its importance becomes clear when considering the massive data volumes used today to train AI models, which would overwhelm traditional Ethernet solutions.

Properly Sized Storage Systems Are Essential for AI

Where to store all the data? In storage solutions, of course. The same trend seen with network components applies here. Without storage solutions that can handle the high volume of I/O operations, AI initiatives fail sooner than most realize. The ever-growing data volume is a leading driver of requirements. It's advisable, and often necessary, to use modern storage solutions. For high-resolution video material, for example, the NVMe storage of DGX systems is simply insufficient for buffering all the data. Fortunately, other hardware manufacturers like IBM have recognized this gap and offer solutions that integrate seamlessly into the AI workloads of AI Factories.

High Energy Consumption: Bad for the Environment, Bad for the Bottom Line

We've already highlighted the sustainability aspect of high energy consumption, but increased AI usage also has a practical aspect: it costs money. Germany, for example, has relatively high electricity costs, so energy-hungry AI quickly becomes expensive. Using modern hardware is therefore a significant lever, in addition to the time gained from better performance. That time gain has a financial benefit of its own, since data scientists are well-paid professionals. If they can accomplish more in less time, the ROI calculation is simple.
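To get a feel for the size of the electricity bill, the power figures quoted earlier in the article (8,200 kW for Titan and 10 kW for a DGX system) can be turned into a rough annual cost. The electricity price of 0.30 EUR per kWh and the assumption of continuous operation are hypothetical placeholders, not figures from the article, and should be replaced with actual contract values.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_cost(power_kw: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Estimate yearly electricity cost for a system drawing `power_kw`.

    `utilization` is the fraction of the year the system runs at that power
    (1.0 means around the clock).
    """
    return power_kw * HOURS_PER_YEAR * utilization * price_per_kwh


# Hypothetical electricity price in EUR per kWh (an assumption for illustration).
PRICE_EUR_PER_KWH = 0.30

legacy_cost = annual_energy_cost(8200, PRICE_EUR_PER_KWH)  # Titan-class power draw
modern_cost = annual_energy_cost(10, PRICE_EUR_PER_KWH)    # DGX-class power draw

print(f"Legacy system: {legacy_cost:,.0f} EUR per year")
print(f"Modern system: {modern_cost:,.0f} EUR per year")
print(f"Difference:    {legacy_cost - modern_cost:,.0f} EUR per year")
```

The estimate covers only the electricity drawn by the compute hardware itself. Cooling overhead and staff time would come on top and are not modeled here.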

Specialized AI Networks: Efficient and Effective

However, not everything has to be larger and more comprehensive when it comes to AI models. To resolve the ongoing conflict between a model's capabilities and its energy consumption and performance, a new approach has gained momentum: specialized AI networks. Think of the endless possibilities of ChatGPT. It can write poems and scientific texts, summarize articles, and more, regardless of the topic. Millions of users worldwide readily make use of these capabilities. This requires a model trained on vast amounts of data, which in turn impacts performance.

What if your application doesn't need the level of capabilities provided by a large LLM? The logical step is to use a smaller, faster, and more cost-effective model. This approach is increasingly common. A model with carefully chosen parameters can handle specific use cases just as well as larger models, provided the application stays within predefined boundaries. This is crucial for running local GenAI applications on mobile devices like smartphones. Apple demonstrates this with iOS 18 and the latest iPhones: basic applications run locally on the device, while more complex cases are handled in a cloud environment based on Apple hardware. This is a practical example of specialized AI models.

NVIDIA Blackwell: Breaking Moore's Law

NVIDIA's latest GPU generation is fascinating, especially in terms of numbers: the flagship chip uses 208 billion transistors. This outpaces the rate predicted by Moore's Law, a doubling of the transistor count every two years. Performance-wise, the new chip surpasses the current benchmark, NVIDIA's Hopper platform, by a factor of five, achieving 20 petaflops. This is the same figure that the entire Titan supercomputer achieved in 2012.
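The Moore's Law comparison can be made concrete with a short calculation. The sketch below assumes a baseline of 80 billion transistors for the Hopper H100 in 2022. That figure is not stated in this article and is taken from NVIDIA's published specifications. The Blackwell count also spans two dies in one package, so a per-die comparison would look different.

```python
def moores_law_projection(start_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, doubling every `doubling_period_years`."""
    return start_count * 2 ** (years / doubling_period_years)


# Assumption (not from the article): Hopper H100, introduced 2022, 80 billion transistors.
HOPPER_TRANSISTORS = 80e9
# From the article: Blackwell flagship, 208 billion transistors.
BLACKWELL_TRANSISTORS = 208e9
YEARS_BETWEEN_GENERATIONS = 2  # 2022 to 2024

projected = moores_law_projection(HOPPER_TRANSISTORS, YEARS_BETWEEN_GENERATIONS)
ratio_to_projection = BLACKWELL_TRANSISTORS / projected

print(f"Moore's Law projection: {projected / 1e9:.0f} billion transistors")
print(f"Blackwell actual:       {BLACKWELL_TRANSISTORS / 1e9:.0f} billion transistors")
print(f"Actual vs. projection:  {ratio_to_projection:.2f}x")
```

Under these assumptions, the projection comes out below the actual Blackwell count, which is the sense in which the chip gets ahead of the two-year doubling rate.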

Of course, the Blackwell chips will also be integrated into NVIDIA's DGX systems. Due to the enormous data volumes, a dedicated NVLink switch was developed to manage communication between multiple GPUs within a DGX system. New network switches, also part of the DGX system, are provided for the same reason. Interestingly, the highest-performance version, the GB200 NVL72, uses liquid cooling.

Conclusion: High Performance with High Energy Awareness

We know it ourselves: the AI market is currently so dynamic that it's easy to lose track. However, we must not lose sight of sustainability. The resource demands of modern GenAI applications will continue to be a challenge. Fortunately, as the efficiency of AI models increases, so does the efficiency of the hardware used. Simply replacing old hardware offers enormous savings potential. Combined with new approaches like specialized AI networks or liquid cooling, we are on the right track.